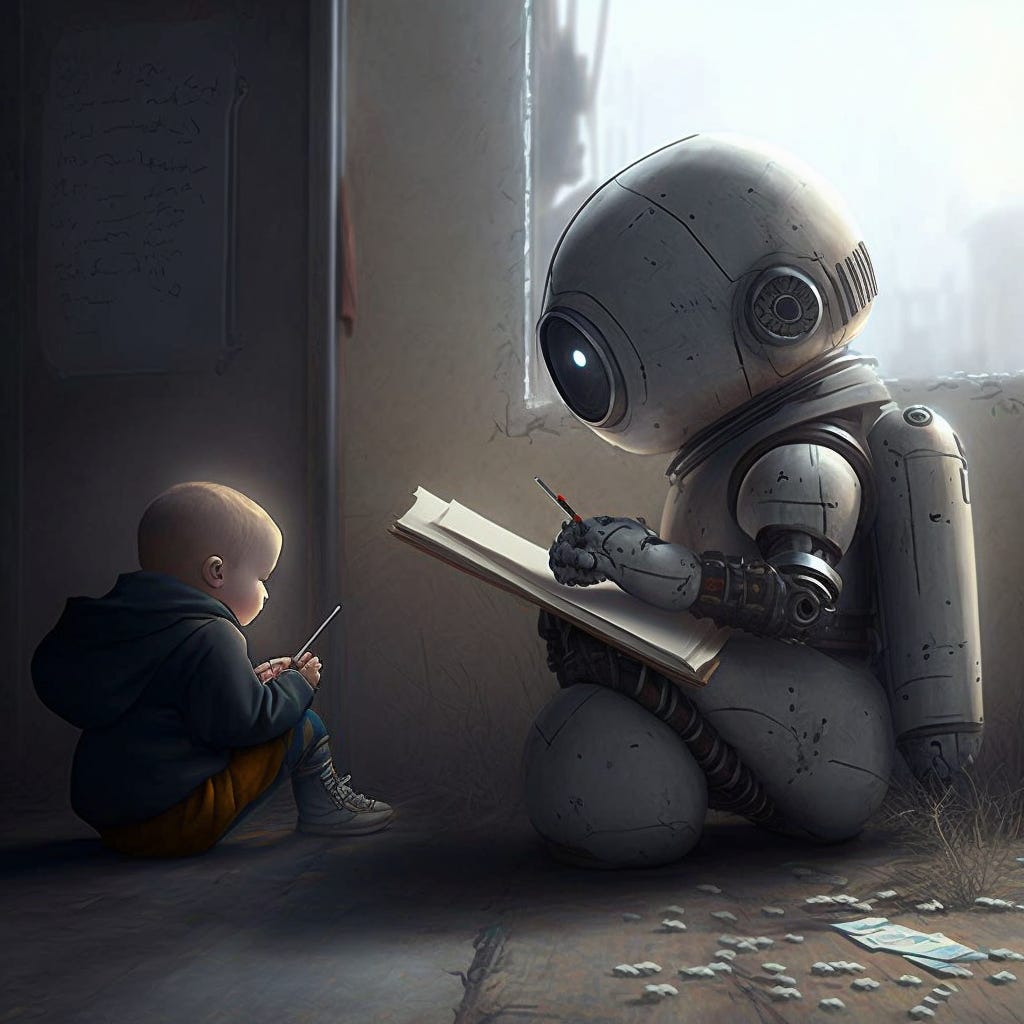

Generative AI: My Enhancement, Your Replacement

A response to Noah Smith and roon's post, "Generative AI: autocomplete for everything."

Today we're up for a challenge.

A couple of weeks ago, I read a thought-provoking article on AI and automation co-written by Noah Smith and roon: “Generative AI: autocomplete for everything.”

The thesis they defend, rather eloquently, is that generative AI isn't going to destroy jobs or replace humans to the degree less optimistic peop…
